High-Bandwidth Memory (HBM) Is Sold Out. So What’s Next?

HBM, a chip technology that plays a central role in today’s AI systems, is expected to remain in short supply for the foreseeable future.

By Mark LaPedus

High-bandwidth memory (HBM), a chip technology that plays a central role in today’s AI systems, is expected to remain in short supply for the foreseeable future, possibly until 2026.

Two HBM suppliers, Micron and SK Hynix, are sold out of these memory chip products. Samsung, the other HBM supplier, is struggling with the technology. Besides the supply issues, prices are increasing for DRAMs, the key chips used for HBM.

A DRAM is a memory chip widely used in computers, smartphones and other products. DRAMs provide the main memory in systems, giving the processor fast, short-term access to data.

Memory companies manufacture DRAM chips in large facilities called fabs. For HBM, a DRAM supplier takes standard DRAM dies and stacks four, six or eight of them vertically on top of each other. The DRAM dies are connected using tiny vertical wires called through-silicon vias (TSVs), which provide the electrical connections between the dies.

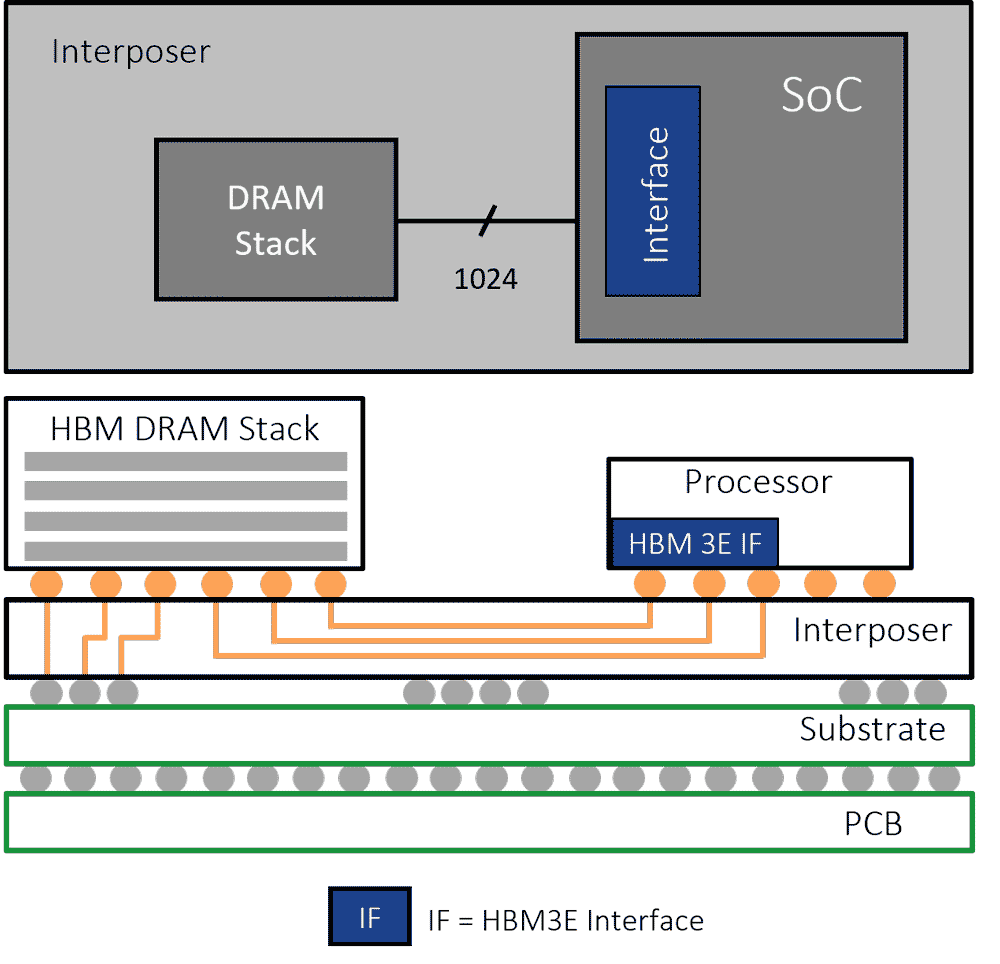

HBM is a specialized memory device used in high-end systems, such as servers and supercomputers. In these systems, a processor chip and an HBM device are often assembled together and electrically connected in a unit called an IC package. HBM enables data to move between the memory and processor at high speeds. It employs a 1024-bit memory bus, far wider than the 64-bit data bus of a standard DDR5 memory module. That wide bus enables high bandwidth.
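To see why bus width matters, the short Python sketch below computes peak bandwidth as bus width times per-pin data rate, divided by eight bits per byte. The 6.4 Gb/s per-pin rate is an illustrative assumption applied to both interfaces so that only the width differs, and the 64-bit DDR5 module data bus is likewise an assumption added here. Real systems reach less than these theoretical peaks.

```python
def peak_bandwidth_gbytes(bus_width_bits: int, pin_rate_gbits: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbits / 8

# ASSUMPTION: illustrative per-pin rate, held equal for both interfaces.
PIN_RATE_GBITS = 6.4

hbm_stack = peak_bandwidth_gbytes(1024, PIN_RATE_GBITS)  # HBM: 1024-bit bus per stack
ddr5_dimm = peak_bandwidth_gbytes(64, PIN_RATE_GBITS)    # ASSUMED 64-bit DDR5 module data bus

print(f"HBM stack: {hbm_stack:.1f} GB/s")      # HBM stack: 819.2 GB/s
print(f"DDR5 DIMM: {ddr5_dimm:.1f} GB/s")      # DDR5 DIMM: 51.2 GB/s
print(f"ratio: {hbm_stack / ddr5_dimm:.0f}x")  # ratio: 16x
```

At the same per-pin speed, the 16-times-wider bus alone yields 16 times the peak bandwidth.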

Two views of HBM. Bottom diagram: An HBM DRAM stack and a processor are situated on an interposer in the same package. HBM and the processor are electrically connected to each other. Top diagram: A high-level view of an HBM DRAM stack connected to the processor. Source: Rambus

HBM isn’t new. Used in servers for several years, HBM was once viewed as an expensive niche technology. It is still expensive today, but thanks to AI, the technology is moving into the limelight.

The latest AI technology, called generative AI (GAI), is taking off. In large data centers, specialized AI servers run the latest GAI models, such as those behind ChatGPT. AI servers incorporate high-speed AI chips, such as GPUs from Nvidia and others. Nvidia’s latest GPU architecture uses eight HBM stacks.

Demand for Nvidia’s GPUs, as well as HBM, is skyrocketing. Nvidia doesn’t make DRAM or HBM. Instead, these devices are manufactured and supplied by three memory companies: Micron, Samsung and SK Hynix.

Thanks to the AI boom, South Korea’s SK Hynix, the world’s largest HBM supplier, is sold out of these products for 2024 and most of 2025. The same is true for U.S.-based Micron. Micron and SK Hynix are the key HBM suppliers to Nvidia.

South Korea’s Samsung is also shipping HBM. So far, though, Samsung’s HBM products have been unable to pass Nvidia’s certification process. According to reports, Samsung is struggling with various yield issues with HBM. “I expect Samsung to contain the issues through screening short term, and then to resolve the issues, and for Samsung to be a significant supplier of HBM to Nvidia long term,” said Mark Webb, a principal/consultant at MKW Ventures Consulting.

Besides the supply issues, other events are taking place in the HBM world, including:

* Suppliers are selling HBM devices that stack up to eight DRAM dies on top of each other. Vendors are ramping up 12-die-high HBM products, with 16-die-high versions in R&D.

* Today’s HBM devices are based on the HBM3E standard. HBMs based on the next-generation HBM4 standard are in R&D.

* China, which is behind in HBM, is working on the technology.

Data explosion

DRAM suppliers are racing each other to ship the next wave of HBM products, and for good reason: demand and profits are enormous. In terms of sales, the worldwide DRAM market is projected to reach $88.4 billion in 2024, up 83% from 2023, according to Gartner. HBM represents a small part of the overall DRAM market, but that’s changing. HBM is projected to account for 21% of the total DRAM market value in 2024, up from 8% in 2023, according to TrendForce.
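A rough back-of-the-envelope reading of these figures is sketched below in Python. It derives the 2023 DRAM market size implied by Gartner’s growth figure, then, as an explicit assumption, applies TrendForce’s HBM shares to Gartner’s totals. The two firms size the market differently, so the resulting HBM dollar values are illustrations only, not forecasts from either firm.

```python
# Figures quoted in the article
DRAM_2024_BUSD = 88.4    # Gartner: 2024 worldwide DRAM revenue, $ billions
DRAM_GROWTH_2024 = 0.83  # Gartner: up 83% from 2023
HBM_SHARE_2023 = 0.08    # TrendForce: HBM share of DRAM market value, 2023
HBM_SHARE_2024 = 0.21    # TrendForce: HBM share of DRAM market value, 2024

# 2023 DRAM revenue implied by Gartner's growth figure
dram_2023_busd = DRAM_2024_BUSD / (1 + DRAM_GROWTH_2024)

# ASSUMPTION: TrendForce's shares applied to Gartner's totals (mixing two sources).
hbm_2023_busd = HBM_SHARE_2023 * dram_2023_busd
hbm_2024_busd = HBM_SHARE_2024 * DRAM_2024_BUSD

print(f"Implied 2023 DRAM revenue: ${dram_2023_busd:.1f}B")  # $48.3B
print(f"Rough HBM value: ${hbm_2023_busd:.1f}B (2023) -> ${hbm_2024_busd:.1f}B (2024)")
print(f"Rough HBM growth: {hbm_2024_busd / hbm_2023_busd:.1f}x")  # 4.8x
```

Under these assumptions, HBM revenue would grow roughly 4.8 times in a single year, far faster than the DRAM market as a whole.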

HBM, along with GPUs and other chips, is playing a big role in today’s booming digital information age. In 2024, the world is expected to generate 1.5 times as much digital data as it did in 2022, according to KKR, a global investment firm.

In many cases, the data is processed and distributed in large facilities called data centers. According to IBM, a hyperscale data center is a facility that contains at least 5,000 servers and occupies at least 10,000 square feet of physical space. A single such facility can draw more than 100 megawatts of power.

That’s just one facility. Amazon, Google, Meta, Microsoft and others own and operate data centers throughout the world. These companies are referred to as cloud service providers (CSPs).

Meanwhile, servers come in different sizes and configurations. Each server consists of a power supply, cooling fans, network interfaces and, of course, various chips. In data centers, most servers are general-purpose systems that handle a wide range of workloads.

For data-intensive AI workloads like ChatGPT, CSPs tend to use AI servers. An AI server, a subset of the overall server market, is a souped-up system with the latest chips. For example, Dell’s new AI server, called the PowerEdge XE9680L, supports eight of Nvidia’s GPUs in a small form factor with direct liquid cooling (DLC).

To operate and maintain the servers and other equipment, CSPs require an enormous amount of power. Driven by AI, global data center power demand is projected to more than double by 2030, according to Goldman Sachs. Some 47 gigawatts of incremental power generation capacity will be required to support U.S. data centers alone through 2030, according to the firm.

CSPs have known about the power problems for years and are working on solutions. The semiconductor industry is playing catch-up.

DRAMs to HBMs

Meanwhile, servers, as well as PCs and smartphones, have the same basic architecture. A system incorporates a board. A central processing unit (CPU), memory and storage reside on the board.

The CPU processes the data. Solid-state drives (SSDs) or hard disk drives provide data storage. SSDs incorporate NAND flash memory devices. NAND is a non-volatile memory type, which means a NAND flash device retains its stored data even when you turn off the computer.

In a system, DRAM is used for the main memory functions. DRAM is volatile, meaning the data is lost when the system is shut down. Plus, DRAMs require a periodic refresh operation to prevent data loss, which in turn causes unwanted power consumption and delays.

“DRAM is made of transistors and capacitors,” explained Benjamin Vincent, a senior manager at Coventor, a Lam Research Company, in a blog. “A transistor carries current to enable information (the bit) to be written or read, while a capacitor is used to store the bit.”

DRAMs aren’t new. In 1966, IBM’s Robert Dennard invented the DRAM. In 1970, Intel introduced one of the first commercial DRAMs, which boasted a capacity of 1 kilobit.

For decades, DRAMs have been the workhorse memory devices in systems. They are cheap and reliable, and suppliers continue to develop more advanced devices. Last year, for example, Samsung introduced a 32-gigabit (Gb) DDR5 DRAM, which will enable DRAM modules with up to 1 terabyte of capacity.

DDR (double data rate) memory transfers data on both the rising and falling edges of the clock signal, doubling the transfer rate between memory and processor for a given clock frequency. Today’s mainstream DRAMs are based on the fifth-generation DDR standard, dubbed DDR5. JEDEC, a standards body, updated the DDR5 standard, extending the data rates from 6400 million transfers per second (MT/s) to 8800 MT/s.
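The relationship between clock speed, transfer rate and module bandwidth can be sketched in a few lines of Python. The sketch assumes a 64-bit (8-byte) module data bus with ECC bits excluded; the I/O clock values of 3200 MHz and 4400 MHz are simply half of the quoted transfer rates, since DDR moves data twice per clock cycle.

```python
BYTES_PER_DIMM_TRANSFER = 8  # ASSUMPTION: 64-bit module data bus, ECC bits excluded

def ddr_transfers_per_s(clock_mhz: float) -> float:
    """DDR moves data on both clock edges, so MT/s = 2 x I/O clock in MHz."""
    return 2 * clock_mhz

def dimm_bandwidth_gbytes(mt_per_s: float) -> float:
    """Peak module bandwidth in GB/s = MT/s x bytes per transfer / 1000."""
    return mt_per_s * BYTES_PER_DIMM_TRANSFER / 1000

for clock_mhz in (3200, 4400):
    rate = ddr_transfers_per_s(clock_mhz)
    print(f"{clock_mhz} MHz clock -> {rate:.0f} MT/s -> {dimm_bandwidth_gbytes(rate):.1f} GB/s")
# 3200 MHz clock -> 6400 MT/s -> 51.2 GB/s
# 4400 MHz clock -> 8800 MT/s -> 70.4 GB/s
```

Moving from 6400 MT/s to 8800 MT/s raises peak module bandwidth by 37.5%, a meaningful gain that still leaves a 64-bit module far behind a single 1024-bit HBM stack.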

That’s still not fast enough. In a system, data must constantly move between the processor and memory. But as the system is inundated with more data, these transfers can take too long, causing unwanted latency.

With all of this in mind, CSPs face a number of challenges. They must not only keep up with the onslaught of data but also deal with power consumption.

So, what are the solutions? CSPs could upgrade their data centers with new servers, networks and cooling systems. They also need help from the semiconductor industry. In response, DRAM vendors have developed new, more advanced products that consume less power.

Another solution is to move the DRAM closer to the processor, thereby speeding up the data transfer rates. For years, the semiconductor industry has been developing a variety of solutions here. For example, several vendors are working on compute-in-memory (CIM) or processor-in-memory (PIM) devices. The idea is to combine the processing and memory functions in the same chip. But that’s been a difficult technology to conquer and is still a work in progress.

Several years ago, the industry came up with another idea: assemble logic and memory dies in the same IC package. A package encapsulates the dies and protects them from harsh operating conditions.

Over the years, the industry has developed a plethora of IC package types. One type, called 2.5D, appears to be the winner for high-end systems, at least for now. In one 2.5D configuration, a processor or FPGA die and one or more HBM stacks sit side by side on an interposer in the package.

Each HBM stack consists of several DRAM dies stacked on top of a base logic die. The interposer, a silicon layer that contains fine wiring and TSVs, connects the FPGA or processor to the HBM stacks and, through the package substrate, to the board. (See diagrams above)

In the 2.5D package, the chips operate as though they were a single device. Plus, the FPGA or processor is next to the memory, enabling faster data access between the two chips. This is accomplished by tightly coupling the HBM memory with the compute die via a distributed interface. “This interface is split into multiple independent channels, which may operate asynchronously,” according to Synopsys in a blog. “Each channel interface features two pseudo-channels with a 32-bit data bus operating at double data rate (DDR). Every channel provides access to a distinct set of DRAM banks.”
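The channel organization Synopsys describes can be modeled as a small data structure. In the Python sketch below, the 16-channel count (the HBM3 arrangement) and the 16 banks per channel are assumptions chosen for illustration, not values from the article; the point is that independent channels, each with two 32-bit pseudo-channels and its own non-overlapping bank set, add up to the 1024-bit interface.

```python
from dataclasses import dataclass, field

@dataclass
class PseudoChannel:
    data_bits: int = 32  # per the Synopsys description: 32-bit bus at double data rate

@dataclass
class Channel:
    index: int
    banks: range  # each channel addresses its own, non-overlapping set of DRAM banks
    pseudo_channels: list = field(default_factory=lambda: [PseudoChannel(), PseudoChannel()])

    @property
    def data_bits(self) -> int:
        return sum(pc.data_bits for pc in self.pseudo_channels)

def build_interface(num_channels: int = 16, banks_per_channel: int = 16) -> list:
    """Build a hypothetical HBM interface model.

    ASSUMPTION: 16 channels (HBM3-style); banks_per_channel is illustrative only.
    """
    return [
        Channel(index=i, banks=range(i * banks_per_channel, (i + 1) * banks_per_channel))
        for i in range(num_channels)
    ]

channels = build_interface()
total_width = sum(ch.data_bits for ch in channels)
print(total_width)  # 1024
```

Because each channel owns a distinct bank set, requests on different channels do not contend for the same banks, which is what lets the channels operate independently.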

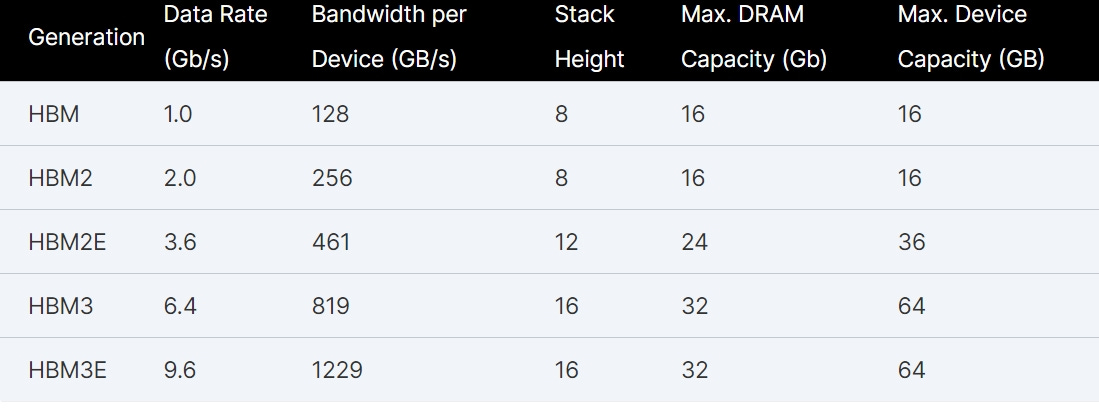

In 2013, SK Hynix introduced the first HBM device, based on the HBM1 standard. Specified at a 1 Gb/s per-pin data rate, HBM1 allowed vendors to stack up to eight 16Gb DRAM dies.

HBM2, the second HBM standard, appeared in 2016, followed by HBM3 in 2022. Today, vendors are shipping HBM devices based on the HBM3E standard. Specified at a 9.6 Gb/s per-pin data rate, HBM3E enables vendors to stack up to sixteen 32Gb DRAM dies.
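The per-pin rates above translate into per-stack bandwidth with the same width-times-rate arithmetic, and the HBM3E stacking limit gives a maximum stack capacity. This Python sketch assumes a 1024-bit interface for both HBM1 and HBM3E and ignores protocol overhead, so the figures are theoretical peaks.

```python
HBM_BUS_WIDTH_BITS = 1024  # ASSUMPTION: 1024-bit interface per stack for HBM1 and HBM3E

# Per-pin data rates quoted in the article, in Gb/s
PIN_RATES_GBITS = {"HBM1": 1.0, "HBM3E": 9.6}

def stack_bandwidth_gbytes(pin_rate_gbits: float) -> float:
    """Peak per-stack bandwidth in GB/s."""
    return HBM_BUS_WIDTH_BITS * pin_rate_gbits / 8

for gen, rate in PIN_RATES_GBITS.items():
    print(f"{gen}: {stack_bandwidth_gbytes(rate):.1f} GB/s per stack")
# HBM1: 128.0 GB/s per stack
# HBM3E: 1228.8 GB/s per stack

# Maximum HBM3E stack capacity: sixteen 32Gb dies, 8 bits per byte
hbm3e_max_gbytes = 16 * 32 / 8
print(f"HBM3E max stack: {hbm3e_max_gbytes:.0f} GB")  # HBM3E max stack: 64 GB
```

Since the bus width stays fixed across these generations, the roughly tenfold bandwidth gain comes entirely from faster pins.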

“HBM3E/3 decreases core voltage to 1.1V from HBM2E’s 1.2V,” according to Rambus in a blog. “Also, HBM3 reduces IO signaling to 400mV from the 1.2V used in HBM2E. Lower voltages translate to lower power. These changes help offset the higher power consumption inherent in moving to higher data rates.”
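Why do lower voltages save power? A common first-order model says dynamic switching power scales with capacitance times voltage squared times frequency. The Python sketch below applies only the voltage-squared term, holding capacitance and frequency fixed. That is a simplifying assumption: it ignores static power, termination and the higher data rates that, as Rambus notes, these voltage cuts help offset.

```python
def relative_dynamic_power(v_new: float, v_old: float) -> float:
    """First-order model: switching power ~ C * V^2 * f, with C and f held fixed."""
    return (v_new / v_old) ** 2

core = relative_dynamic_power(1.1, 1.2)  # core voltage: HBM2E 1.2 V -> HBM3 1.1 V
io = relative_dynamic_power(0.4, 1.2)    # I/O signaling: 1.2 V -> 400 mV

print(f"core: {core:.3f}x, I/O: {io:.3f}x")  # core: 0.840x, I/O: 0.111x
```

In this simplified view, the core voltage cut trims switching power by about 16%, while the I/O signaling change cuts it by nearly 90% per transition.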

JEDEC will soon release the specifications for the next-generation HBM4 standard. HBM4 products are due out in 2026.

HBM specs. Source: Rambus

HBM supply and demand

Thanks to Nvidia, demand for HBM is overwhelming. In 2022, Nvidia introduced the H100, a GPU that consists of 80 billion transistors. The H100 architecture includes five HBM sites. Today, Nvidia is ramping up Blackwell, a new GPU architecture with 208 billion transistors that uses eight HBM stacks.

With each new product introduction, Nvidia is increasing the HBM memory capacity. “Nvidia is releasing a new product or architecture every year. With these releases, they are approximately doubling the memory each time,” said MKW Ventures’ Webb.
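Doubling memory with every annual release compounds quickly. The Python sketch below projects relative capacity from a hypothetical normalized baseline of 1.0, taking Webb’s “approximately doubling” as an exact factor of two. It is an extrapolation for illustration, not a product roadmap; real products will not track it exactly.

```python
def projected_capacity(baseline: float, releases: int, growth_per_release: float = 2.0) -> float:
    """Relative memory capacity after a number of releases, each multiplying it by growth_per_release."""
    return baseline * growth_per_release ** releases

# Hypothetical normalized baseline; one release per year
for n in range(4):
    print(n, projected_capacity(1.0, n))
# 0 1.0
# 1 2.0
# 2 4.0
# 3 8.0
```

Under this assumption, three years of annual releases would put roughly eight times as much HBM behind each GPU.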

To meet the demand, DRAM suppliers are expanding their HBM production. By year’s end, the DRAM industry is expected to allocate roughly 250,000 wafers per month (14%) of total fab capacity to HBM, representing annual supply bit growth of 260%, according to TrendForce.
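The TrendForce figures also imply the size of the overall DRAM fab base. The Python sketch below assumes the 250K figure counts wafers per month and reads “260% supply bit growth” as an increase of 260%, meaning 3.6 times the prior year’s supply. Both readings are interpretations.

```python
HBM_WAFERS_PER_MONTH = 250_000  # ASSUMPTION: TrendForce's "250K/m" read as wafers per month
HBM_SHARE_OF_CAPACITY = 0.14    # 14% of total DRAM fab capacity
BIT_GROWTH = 2.60               # "260% annual supply bit growth", read as +260%

total_wafers_per_month = HBM_WAFERS_PER_MONTH / HBM_SHARE_OF_CAPACITY
supply_multiplier = 1 + BIT_GROWTH

print(f"Implied total DRAM capacity: {total_wafers_per_month:,.0f} wafers/month")  # 1,785,714
print(f"HBM bit supply vs. prior year: {supply_multiplier:.1f}x")  # 3.6x
```

On this reading, the industry’s total DRAM capacity is roughly 1.8 million wafers per month, and HBM bit supply would more than triple in a year.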

For now, though, suppliers are sold out of HBM products. “I am not convinced that ‘sold out’ is the best term. I would say that companies have orders through ‘25. They have orders for everything they can make for the next two years,” said MKW Ventures’ Webb.

The demand is driving up prices for server DRAM. Average contract prices for server DRAM are expected to rise by 8%-13% in the third quarter of 2024 compared with the previous quarter, according to TrendForce.

This could all change. “I would say there’s still some possibility that (HBM) orders won’t materialize and that people are pre-ordering based on plans and potentially double ordering or overstocking,” said MKW Ventures’ Webb.

There is another scenario. If the AI market slows, Meta, Microsoft and others could push out, or even halt, their server orders, which would hurt chip vendors.

HBM or bust

As stated, memory makers produce DRAMs in a fab using a long series of process steps. With each new generation, DRAMs become more difficult to manufacture. A key challenge is shrinking the tiny capacitor in each DRAM cell.

For HBM, memory makers take standard DRAM, stack the dies and connect them using TSVs. This requires process steps beyond those used to make standard DRAM, such as forming the TSVs, thinning the wafers and bonding the stacked dies.

The HBM process steps are well known and established. But at each generation, memory makers are dealing with new and more complex DRAMs. That adds complexity to the HBM manufacturing process.

Plus, the die sizes for HBM are 35%-45% larger than DDR5 DRAM, according to Avril Wu, an analyst at TrendForce. In addition, the yield rates for HBM are 20%-30% lower than DDR5 DRAM, Wu said.
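Larger dies and lower yields compound. The Python sketch below uses a simple first-order model in which cost per good die is proportional to die area divided by yield, assuming equal wafer cost. It treats Wu’s “20%-30% lower” yields as a relative reduction, an interpretation, and ignores the extra cost of TSVs, stacking and edge losses, so it likely understates the real gap.

```python
def relative_cost_per_good_die(area_ratio: float, yield_ratio: float) -> float:
    """First-order model: cost per good die ~ die area / yield, at equal wafer cost."""
    return area_ratio / yield_ratio

# HBM vs. DDR5: dies 35%-45% larger, yields 20%-30% lower (read as relative reductions)
low = relative_cost_per_good_die(1.35, 1 - 0.20)
high = relative_cost_per_good_die(1.45, 1 - 0.30)

print(f"HBM cost per good die: {low:.2f}x to {high:.2f}x DDR5")  # 1.69x to 2.07x DDR5
```

Even before packaging, each good HBM die would cost roughly 1.7 to 2.1 times as much as a DDR5 die under these assumptions.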

So, who’s doing what in HBM? Today, Micron, Samsung and SK Hynix are shipping HBM3E products with 8-high DRAM stacks. Vendors are now sampling HBM with 12-high stacks, which present some challenges. “The thermal issues on eight are very challenging. The 12-stack products will be even more challenging, but are being addressed,” Webb said.

Meanwhile, last year, SK Hynix announced what the company claimed was the industry’s first 12-layer HBM3 product, with a 24GB memory capacity.

Earlier this year, Samsung began sampling a 12-high HBM3E product. With a capacity of 36GB, Samsung’s product provides a bandwidth of up to 1,280 gigabytes per second (GB/s).

Not to be outdone, Micron is sampling a 36GB 12-die HBM3E, which is set to deliver greater than 1.2 TB/s performance. In the meantime, Micron is seeing huge demand for its current HBM products.
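Stack capacity is simply the number of dies times the density of each die. The Python sketch below reproduces the quoted capacities under assumed die densities of 16Gb and 24Gb, which the article does not state. It also works backward from Samsung’s quoted 1,280 GB/s to the per-pin rate that figure implies on a 1024-bit bus.

```python
def stack_capacity_gbytes(dies: int, die_density_gbits: int) -> float:
    """Stack capacity in GB = number of DRAM dies x per-die density (Gb) / 8."""
    return dies * die_density_gbits / 8

def implied_pin_rate_gbits(bandwidth_gbytes: float, bus_width_bits: int = 1024) -> float:
    """Per-pin rate (Gb/s) needed to reach a given stack bandwidth."""
    return bandwidth_gbytes * 8 / bus_width_bits

# ASSUMED die densities (not stated in the article)
print(stack_capacity_gbytes(12, 16))  # 24.0  (12-high, 16Gb dies)
print(stack_capacity_gbytes(12, 24))  # 36.0  (12-high, 24Gb dies)
print(stack_capacity_gbytes(8, 24))   # 24.0  (8-high, 24Gb dies)
print(implied_pin_rate_gbits(1280))   # 10.0  (Samsung's quoted 1,280 GB/s)
```

The same 24GB capacity can come from 12 lower-density dies or 8 higher-density ones, which is why die count alone does not determine a stack’s capacity.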

“Our HBM shipment ramp began in fiscal Q3, and we generated over $100 million in HBM3E revenue in the quarter,” said Sanjay Mehrotra, president and chief executive at Micron, in a recent conference call. “We expect to generate several hundred million dollars of revenue from HBM in fiscal 2024 and multiple billions of dollars in revenue from HBM in fiscal 2025.”

Others are also pursuing HBM, particularly China, which has no presence in this market today. ChangXin Memory Technologies (CXMT) and Huawei are separately developing HBM, according to reports. China appears to be focused on older HBM2 products.

So where is this all leading? “We are in the early innings of a multiyear race to enable artificial general intelligence, or AGI, which will revolutionize all aspects of life. Enabling AGI will require training ever-increasing model sizes with trillions of parameters and sophisticated servers for inferencing. AI will also permeate to the edge via AI PCs and AI smartphones, as well as smart automobiles and intelligent industrial systems. These trends will drive significant growth in the demand for DRAM and NAND,” Micron’s Mehrotra said.

Let’s not forget GPUs and other chips, too. The question is how long the AI boom will last and what its long-term ramifications will be.